After the successive releases of Gemma, Gemini, and Gemini 1.5, Google is further expanding its footprint in artificial intelligence.

At its Cloud Next conference, Google dropped several AI “bombs”: the official public launch of Gemini 1.5 Pro, its entry into the AI video model race, the release of the code model CodeGemma, and the imminent launch of new AI chips, among a flurry of other moves.

Gemini 1.5 Pro: Comprehensive Public Testing

Although its debut was overshadowed by OpenAI’s Sora, Gemini 1.5 Pro is now officially open to the public.

Google’s Gemini 1.5 Pro can perform highly complex understanding and reasoning tasks across different modalities, and it can tackle more relevant problem-solving tasks over longer blocks of code.

Gemini 1.5 Pro can handle 1 million tokens, five times Claude 3’s maximum context of 200K tokens! Even GPT-4 Turbo offers a context of only 128K tokens.
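The size comparison above is simple division. The sketch below makes it explicit. It assumes the rounded, nominal figures quoted in the article (1M, 200K, and 128K tokens, with “K” read as thousands), not any exact per-version limits.

```python
# Nominal context-window sizes in tokens, as quoted in the article.
# Assumption: "K" means thousands and the figures are rounded marketing numbers.
CONTEXT_WINDOWS = {
    "Gemini 1.5 Pro": 1_000_000,
    "Claude 3": 200_000,
    "GPT-4 Turbo": 128_000,
}


def ratio_vs(model: str, baseline: str) -> float:
    """Return how many times larger `model`'s context window is than `baseline`'s."""
    return CONTEXT_WINDOWS[model] / CONTEXT_WINDOWS[baseline]


if __name__ == "__main__":
    for other in ("Claude 3", "GPT-4 Turbo"):
        print(f"Gemini 1.5 Pro vs {other}: {ratio_vs('Gemini 1.5 Pro', other):.1f}x")
```

On these numbers, Gemini 1.5 Pro’s window is exactly 5 times Claude 3’s and roughly 7.8 times GPT-4 Turbo’s.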

With its ability to understand very long contexts, Gemini 1.5 Pro can comprehend, compare, and contrast the complete scripts of two movies to help users decide which one is worth watching. It can also translate English into Kalamang, a language spoken by fewer than 200 people in western New Guinea.

It can also locate, understand, and explain a small table buried in a lengthy paper. For example, Gemini 1.5 Pro can extract “Table 8” from DeepMind’s Gemini 1.5 Pro paper and explain its significance.

It’s worth mentioning that Gemini 1.5 Pro can also judge whether a video is AI-generated. For example, it can watch OpenAI Sora’s videos, understand their content, and determine whether they were generated by AI. Given Sora’s cat video, Gemini 1.5 Pro points out the key cues suggesting the clip was AI-generated.

When it was released in February, Gemini 1.5 Pro was not yet open to the public, and only a small number of users took part in the beta test. Now Gemini 1.5 Pro is available for public testing on Vertex AI, allowing everyone to try it for free.

With this public testing release, Gemini 1.5 Pro also gains audio processing, letting it handle audio streams that include speech and the audio tracks of videos.

This removes the boundaries between text, images, audio, and video, enabling analysis of multimodal files with a single click.

In a previous earnings call, Google noted that a single model, Gemini 1.5 Pro, can transcribe, search, analyze, and answer questions about many kinds of media.

Gemini 1.5 Pro was released on the same day as OpenAI’s video generation model Sora and was completely overshadowed by Sora’s acclaim. Will this public beta finally mark its comeback?

Although seamlessly processing inputs across very long contexts still has limitations, the full opening of Gemini 1.5 Pro makes native multimodal inference over massive amounts of data possible.

Vast amounts of data can now be analyzed comprehensively and from multiple angles. Gemini 1.5 Pro has already delivered strong practical results in multidimensional data-processing tasks, both for individual users and for enterprise customers such as Sep, TBS, and Replit.

Upgraded “Video Version” Imagen 2.0

Having been overshadowed by Sora, Google has now officially entered the video model race. This time, it released an upgraded “video version” of Imagen 2.0 that can generate animated images from text.

From a text prompt alone, Imagen 2.0 can create short animated images at 24 frames per second, with a resolution of 360×640 pixels and a duration of 4 seconds.
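The clip specifications above translate into a concrete frame and pixel budget. The following is a minimal back-of-the-envelope sketch, not anything from Google’s API. The `ClipSpec` class is hypothetical, and treating 640 as the width is an assumption. The totals are the same in either orientation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClipSpec:
    """Hypothetical container for the basic parameters of a generated clip."""
    seconds: float
    fps: int
    width: int
    height: int

    @property
    def frames(self) -> int:
        # Total frames = duration x frame rate.
        return round(self.seconds * self.fps)

    @property
    def pixels_per_frame(self) -> int:
        return self.width * self.height

    @property
    def total_pixels(self) -> int:
        # Raw pixel count produced across the whole clip.
        return self.frames * self.pixels_per_frame


# Figures quoted for Imagen 2.0 animated images (orientation assumed).
imagen_clip = ClipSpec(seconds=4, fps=24, width=640, height=360)
print(imagen_clip.frames, imagen_clip.pixels_per_frame, imagen_clip.total_pixels)
```

A 4-second clip at 24 fps therefore contains 96 frames of 230,400 pixels each, about 22 million pixels in total.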

Google stated at the Next conference that Imagen 2.0 excels at natural landscapes, food, and animals. It keeps the whole sequence visually consistent across varied camera angles and motion, and it is equipped with safety filtering and digital watermarking.

Google has also upgraded Imagen 2.0 with image editing functions, adding inpainting (image repair), outpainting (image extension), and digital watermarking.

For example, removing a man from a picture is as easy as circling him. Imagen 2.0 then automatically fills in the landscape where he stood.

Imagen 2.0 can also expand an image’s field of view and apply one-click adjustments to selected images.

Imagen 2.0’s new digital watermarking feature is powered by Google DeepMind’s SynthID. With it, users can embed invisible watermarks in images and videos and later verify whether they were generated by the model.

Code Model CodeGemma Released

In the two months between Gemini 1.5 Pro’s release and its public testing, Google has brought a range of cutting-edge models to Vertex AI, including its own Gemini 1.0 Pro, the lightweight open-source model Gemma, and Anthropic’s Claude 3. Alongside these, the release of the code model CodeGemma has drawn particular attention.

The newly released lightweight code generation model CodeGemma adopts the same architecture as the Gemma series and is further trained on over 500 billion code tokens.

Both the pretrained (PT) and instruction-tuned (IT) versions of CodeGemma 7B excel at natural language understanding and mathematical reasoning. Their code generation is on par with other open-source models.

CodeGemma 2B is a state-of-the-art (SOTA) code completion model built for fast code infilling and open-ended code generation.
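Code infilling is usually driven by a fill-in-the-middle (FIM) prompt. The prompt supplies the code before and after a gap and asks the model to generate the missing middle. The sketch below only builds such a prompt string and does not call any model. It assumes the prefix-suffix-middle layout and the `<|fim_prefix|>`, `<|fim_suffix|>`, and `<|fim_middle|>` tokens described in the CodeGemma model card. Check the card for your model version before relying on them. The helper `build_fim_prompt` is hypothetical.

```python
# FIM special tokens, assumed from the CodeGemma model card.
FIM_PREFIX = "<|fim_prefix|>"
FIM_SUFFIX = "<|fim_suffix|>"
FIM_MIDDLE = "<|fim_middle|>"


def build_fim_prompt(prefix: str, suffix: str) -> str:
    """Build a prefix-suffix-middle (PSM) infilling prompt.

    The model is expected to generate the code that belongs between
    `prefix` and `suffix`, emitted after the FIM_MIDDLE token.
    """
    return f"{FIM_PREFIX}{prefix}{FIM_SUFFIX}{suffix}{FIM_MIDDLE}"


# Hypothetical example: ask the model to fill in the body of `add`.
before = "def add(a, b):\n    "
after = "\n    return result\n"
prompt = build_fim_prompt(before, after)
print(prompt)
```

Placing the suffix before the middle token lets a left-to-right model see both sides of the gap before it starts generating.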

Paper link: CodeGemma Report

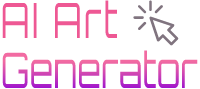

Google also announced a series of upgrades to its AI supercomputing platform, including newer Nvidia chips, new software, and flexible consumption models.

Notably, the Google Cloud Tensor Processing Unit (TPU) v5p is now available, and Google has partnered with Nvidia to accelerate AI development.

The custom chip is now fully open to cloud customers, further strengthening Google Cloud’s competitiveness in AI.

First Arm Architecture CPU Chip

On the hardware front, Google has also made a major move.

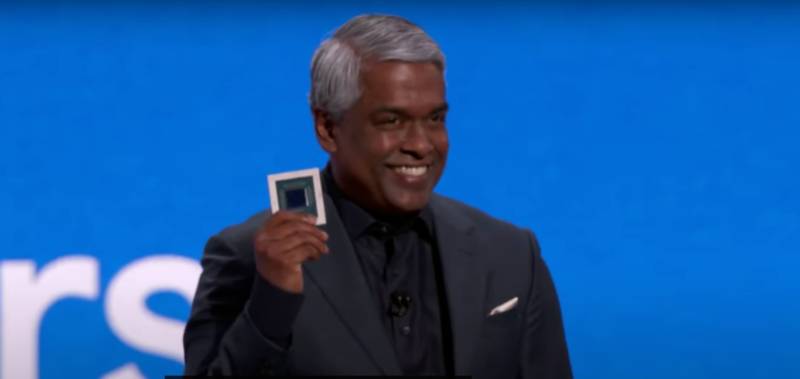

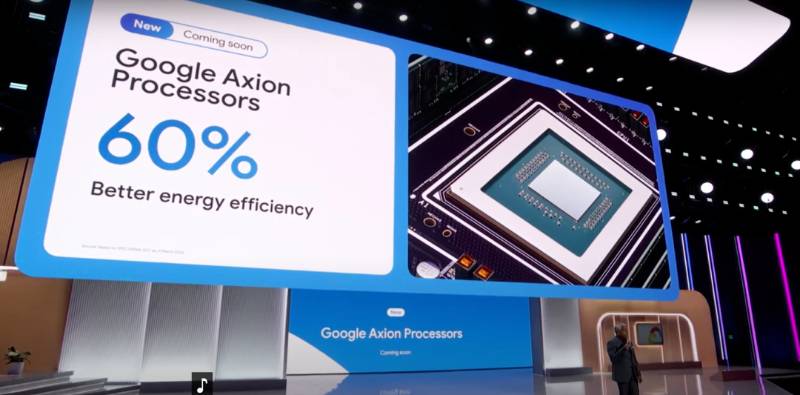

At the Next conference, Google officially announced Axion, its first CPU based on the Arm architecture. It is a data-center chip designed to handle workloads ranging from YouTube ads to big-data analytics.

The chip continues Google’s decade-long history of chip innovation. Since ChatGPT sparked the AI race at the end of 2022, Google has leaned more heavily on self-developed chips to reduce its dependence on external suppliers.

According to Google, Axion will deliver better performance and energy efficiency: up to 50% better performance and up to 60% better energy efficiency than the latest comparable x86 chips from Intel and AMD.

Compared with the fastest Arm-based general-purpose chips currently available, Axion offers up to 30% higher performance.

With Axion, Google now competes with longtime partners such as Intel and Nvidia and challenges Microsoft and Amazon. However, Google vice president Amin Vahdat said the move aims to expand the market rather than to compete.

According to reports, Axion will help Google improve performance on general-purpose workloads such as open-source databases, web and application servers, in-memory caches, data analytics engines, media processing, and AI training. Later this year, Axion will be available in Google Compute Engine, Google Kubernetes Engine, Dataproc, Dataflow, Cloud Batch, and other cloud services.

Axion is a CPU rather than one of the GPUs favored by the AI industry. Even so, CPUs like Axion can add to the computing power available for training AI models.

CPUs also have a cost advantage. For some large-dataset workloads, they can be a faster and more cost-effective option than GPUs. By comparison, Nvidia’s Blackwell chip is expected to be priced between $30,000 and $40,000.

Axion chips already power YouTube ads and Google Earth Engine. Customers already running on Arm can migrate to Axion easily, without re-architecting or rewriting their applications.

Subsequently, WPP, the world’s largest advertising group, announced a major collaboration with Google. It will use Gemini AI to help create advertisements, including voiceovers, scripts, and product imagery.

This means Google’s AI may ultimately produce advertisements for some of the world’s largest brands, such as Coca-Cola, L’Oréal, and Nestlé.

Beyond its many major AI announcements at Next, Google also said it would make its AI-driven editing tools free for all Google Photos users.

Previously, some enhanced editing features, including the AI-driven Magic Editor, were limited to Pixel devices and paid subscribers, but now they will be freely available to all Google Photos users.

The expansion also covers Magic Eraser, which removes unwanted objects from photos; Photo Unblur, which uses machine learning to sharpen blurry photos; Portrait Light, which lets you change a photo’s light source after it was taken; and other features.

Editing tools have long been a selling point of Google’s high-end Pixel phones and a major draw of Google One, its cloud storage subscription.

However, as more and more AI editing tools enter the market, Google has decided to offer its AI-powered photo editing features to more people for free.

The tools will begin rolling out on May 15th and will take a few weeks to reach all Google Photos users. Some also have minimum device requirements. Even so, the move marks another significant step by Google to broaden its AI footprint and sharpen its competitiveness.

At Google I/O 2023, Google released a slate of products including large language models, AI chatbots, AI search, and AI office assistants. At this year’s Cloud Next, Google’s AI offensive emphasized sheer volume.

What new moves will Google unveil at the upcoming I/O 2024 conference? We will keep an eye on it.
